How 10 Prompts Replaced an Afternoon of Coding and a $100 Subscription

The $0.13 Workflow That Beat Otter.ai at Its Own Game

TL;DR

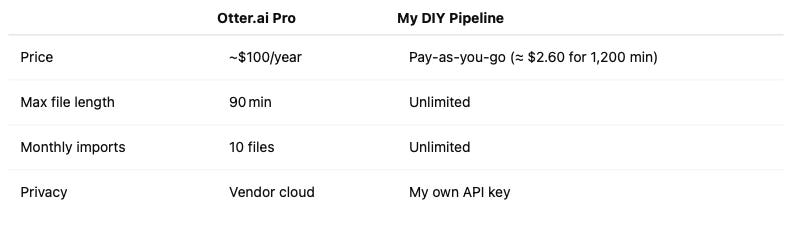

Problem: I've been using voice memos on my Apple Watch to capture ideas, todos, even journaling. I wanted to use Otter.ai but its file upload limits and length limits are aggressive, and I don't want another app.

Solution: In ten prompts, I had an GPT o4 write a Python script that transcribes any length memo, drops a summary + actionable to‑dos, and saves everything to Obsidian.

Cost: ~ $0.13 to process a 50 MB / 60‑minute file—about 40× cheaper than Otter (and without any limits on number of files transcribed or their length).

Next up: Auto‑detect new memos, kick off the pipeline instantly, and forget I ever touched a manual upload button.

The Moment of Friction 🤔

I’m that person who narrates half‑baked ideas into my wrist whilst crossing Market Street. Those snippets disappear into a black hole until I transcribe them—which almost never happens. Otter looked promising, but the math didn’t.

Otter’s features are slick, but I really just need clean text + a quick action list.

Building the Tool (While Working on Something Else)

Prompt #1: “I want to be able to transcribe mp4 files to text transcripts using OpenAI's APIs. Please write me a python script that is run on a mac at the command line, where it receives a local mp4 as an argument.”

Prompt #2: “please add some realtime status indication so I know what's happening. it just sits there silently at the commandline after calling it. progress bars please.”

Prompt #3: “great. Can you change this to return SRT format, and then take the output and give it to GPT-4o and ask it to return a better formatted (paragraphs) output?”

…

Prompt #10: “Perfect.

Now, I like this final transcript (that might actually have been sent to multiple 4o instances and then aggregated). Once it's been assembled, send this final aggregated transcript to 4o one final time, and generate (and label with markdown headers) a Summary and Next Steps section at the bottom. The summary should be a single paragraph, and the Next Steps should include whatever look like action items, to dos, followups, etc. in markdown format ("- [ ]").”

Total wall‑clock time: about an hour, maybe only 10 of which actually focused on this particular project. o4 did all the lifting; I just nudged.

$ python transcribe_mp4.py ~/VoiceMemos/2025‑06‑07‑idea.m4a

Transcribing…

Done! → 2025‑06‑07‑idea.md

The Economics 🤑

OpenAI Whisper: $0.006 / min → ≈ $0.36 / hour of audio.

GPT‑4o summarization: 1.5k input tokens + 300 output tokens ≈ $0.10.

Even being generous, that’s $0.46 per hour. My real world experiment with a 50mb/60 minute file cost $0.13. Not quite sure how that worked out. Compare that to Otter’s cap of 1,200 monthly minutes at $100/yr—it isn’t even a contest.

Bigger Picture: SaaS, Meet Your Hungry LLM

Two takeaways keep ringing in my head:

LLMs as Swiss Army devs. Ten prompts replaced an afternoon of coding—and an annual SaaS subscription. The marginal cost of small, purpose‑built tools is collapsing.

APIs > Bundles. When the core commodity (transcription + summarization) is a cheap API call, feature‑heavy SaaS wrappers start to look… squishy.

I like Otter; they’re solving a real problem. But for my narrow use‑case—and probably thousands like it—the DIY route is faster, cheaper, and keeps my data where I want it: with me.

Try It Yourself

Grab the script, swap in your own OpenAI key, and point it at an audio file. That’s it. If you improve on the workflow, let me know—nerd brain wants to see what you build.

(Written in Obsidian, powered by a wrist‑tap, 10 prompts, and a $0.13 bill.)

#!/usr/bin/env python3

"""

Transcribe media files to SRT using OpenAI Whisper API (v1+), auto-splitting large media files, showing upload progress, formatting into paragraphs via GPT-4o, and finally generating a markdown Summary and Next Steps.

Usage:

$ python transcribe_mp4.py /path/to/input.mp4 \

[-o /path/to/output.txt] [--save-srt SRT_PATH] [--segment-length SECONDS]

Dependencies:

pip install openai requests tqdm requests-toolbelt

ffmpeg must be installed and on your PATH

Configuration:

export OPENAI_API_KEY="your_api_key_here"

"""

import argparse

import os

import sys

import subprocess

import tempfile

import glob

import requests

from requests_toolbelt.multipart.encoder import MultipartEncoder, MultipartEncoderMonitor

from tqdm import tqdm

from openai import OpenAI

API_URL = "https://api.openai.com/v1/audio/transcriptions"

MAX_BYTES = 25 * 1024 * 1024 # ~25 MiB upload limit

MAX_CHUNK_CHARS = 15000 # approx char limit per GPT-4o request

# Initialize OpenAI client

api_key = os.getenv("OPENAI_API_KEY")

if not api_key:

sys.exit("Error: Environment variable OPENAI_API_KEY not set.")

client = OpenAI(api_key=api_key)

def transcribe_single(file_path: str, model: str) -> str:

"""

Upload and transcribe one media file chunk with progress bar, return SRT text.

"""

filename = os.path.basename(file_path)

encoder = MultipartEncoder(

fields={

'file': (filename, open(file_path, 'rb'), 'application/octet-stream'),

'model': model,

'response_format': 'srt'

}

)

def progress_callback(monitor):

bar.update(monitor.bytes_read - bar.n)

headers = {'Authorization': f'Bearer {api_key}', 'Content-Type': encoder.content_type}

with tqdm(total=encoder.len, unit='B', unit_scale=True, desc=f'Uploading {filename}') as bar:

monitor = MultipartEncoderMonitor(encoder, progress_callback)

resp = requests.post(API_URL, data=monitor, headers=headers)

if not resp.ok:

raise RuntimeError(f"Transcription failed ({resp.status_code}): {resp.text}")

return resp.text.strip()

def transcribe_file(file_path: str, model: str, segment_length: int) -> str:

"""

Transcribe full file to combined raw SRT, splitting if too large.

"""

size = os.path.getsize(file_path)

if size <= MAX_BYTES:

return transcribe_single(file_path, model)

print(f"File {size/1024/1024:.1f}MiB exceeds {MAX_BYTES/1024/1024:.1f}MiB; splitting into {segment_length}s segments...")

transcripts = []

with tempfile.TemporaryDirectory() as tmp:

pattern = os.path.join(tmp, 'seg%03d.mp4')

cmd = ['ffmpeg', '-hide_banner', '-loglevel', 'error', '-i', file_path,

'-f', 'segment', '-segment_time', str(segment_length),

'-reset_timestamps', '1', '-c', 'copy', pattern]

subprocess.check_call(cmd)

segs = sorted(glob.glob(os.path.join(tmp, 'seg*.mp4')))

for idx, seg in enumerate(segs, 1):

print(f"\nSegment {idx}/{len(segs)}: {os.path.basename(seg)}")

transcripts.append(transcribe_single(seg, model))

return "\n\n".join(transcripts)

def chunk_srt(srt: str, max_chars: int) -> list:

"""

Split raw SRT text into chunks <= max_chars, preserving block boundaries.

"""

blocks = srt.strip().split("\n\n")

chunks, current, count = [], [], 0

for blk in blocks:

length = len(blk) + 2

if count + length > max_chars and current:

chunks.append("\n\n".join(current))

current, count = [], 0

current.append(blk)

count += length

if current:

chunks.append("\n\n".join(current))

return chunks

def format_large_srt(raw_srt: str) -> str:

"""

Format large SRT via chunked GPT-4o calls, return full formatted transcript.

"""

chunks = chunk_srt(raw_srt, MAX_CHUNK_CHARS)

formatted = []

for i, chunk in enumerate(chunks, 1):

print(f"Formatting chunk {i}/{len(chunks)} with GPT-4o...")

system_prompt = ("You are an assistant that converts SRT subtitles into a clean, readable transcript."

" Remove timestamps and group sentences into paragraphs.")

user_prompt = f"Convert the following SRT into plain transcript paragraphs:\n\n{chunk}"

resp = client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": user_prompt}

]

)

formatted.append(resp.choices[0].message.content.strip())

return "\n\n".join(formatted)

def generate_summary_and_next_steps(formatted_text: str) -> str:

"""

Send full transcript to GPT-4o to generate markdown Summary and Next Steps.

"""

print("Generating summary and next steps with GPT-4o...")

system_prompt = ("You are an assistant that reads a transcript and produces two markdown-labeled sections:"

" '## Summary' (one concise paragraph) and '## Next Steps' (bullet list of action items as '- [ ]').")

user_prompt = (

"Please analyze the following transcript and generate a '## Summary' section with a single paragraph summarizing its contents,"

" then a '## Next Steps' section listing actionable follow-ups using markdown task syntax ('- [ ]').\n\n"

+ formatted_text

)

resp = client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": user_prompt}

]

)

return resp.choices[0].message.content.strip()

def main():

parser = argparse.ArgumentParser(

description="Transcribe media → SRT → formatted transcript → summary & next steps, splitting large files as needed.")

parser.add_argument("input_file", help="Path to audio/video file.")

parser.add_argument("-o", "--output_file", help="Save final output here.")

parser.add_argument("--save-srt", help="Optional path to save raw SRT.")

parser.add_argument("--model", default="whisper-1", help="Whisper model name.")

parser.add_argument("--segment-length", type=int, default=300,

help="Segment duration in seconds for splitting.")

args = parser.parse_args()

try:

raw_srt = transcribe_file(args.input_file, args.model, args.segment_length)

except Exception as e:

print(f"Error during transcription: {e}", file=sys.stderr)

sys.exit(1)

if args.save_srt:

try:

with open(args.save_srt, 'w', encoding='utf-8') as f:

f.write(raw_srt)

print(f"Raw SRT saved to: {args.save_srt}")

except Exception as e:

print(f"Failed saving SRT: {e}", file=sys.stderr)

# Format transcript into paragraphs

try:

formatted = format_large_srt(raw_srt)

except Exception as e:

print(f"Error during formatting: {e}", file=sys.stderr)

sys.exit(1)

# Generate summary and next steps

try:

summary_next = generate_summary_and_next_steps(formatted)

except Exception as e:

print(f"Error generating summary/next steps: {e}", file=sys.stderr)

sys.exit(1)

final_output = f"{formatted}\n\n{summary_next}"

if args.output_file:

try:

with open(args.output_file, 'w', encoding='utf-8') as f:

f.write(final_output)

print(f"Final output saved to: {args.output_file}")

except Exception as e:

print(f"Failed writing final output: {e}", file=sys.stderr)

sys.exit(1)

else:

print(final_output)

if __name__ == "__main__":

main()